Their sharp disagreement about precedent reflects different worldviews that go far beyond abortion.

By Myron Magnet

May 22, 2019 6:53 p.m. ET

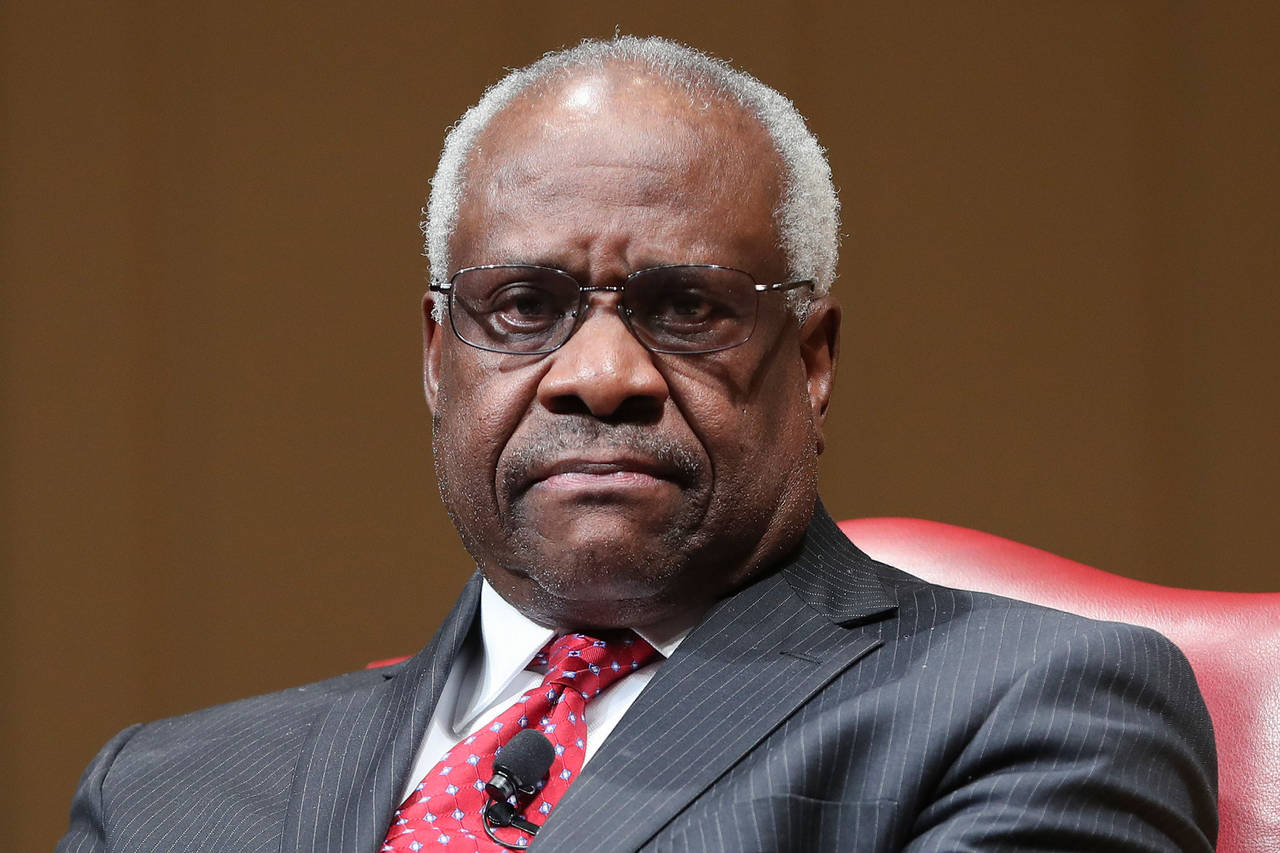

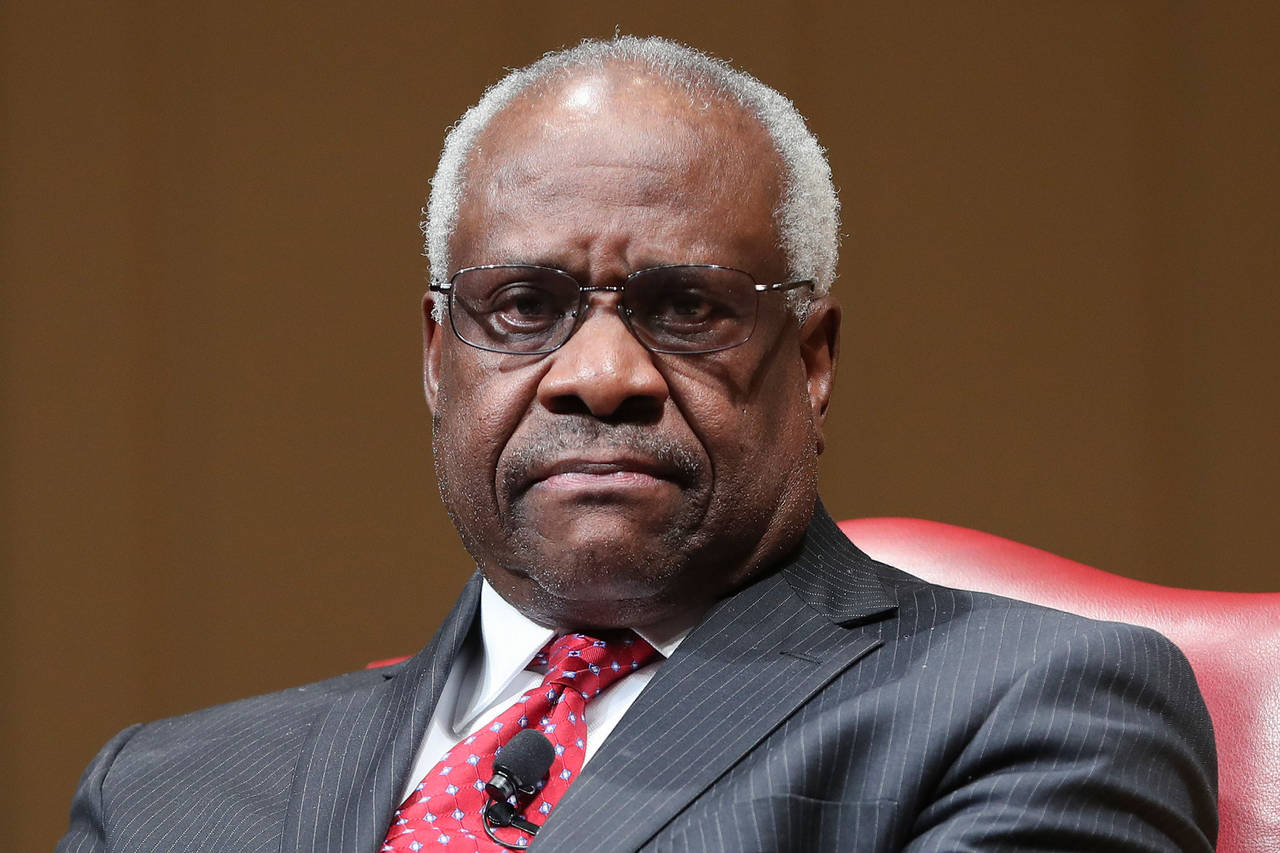

Justice Clarence Thomas in Washington, Feb. 15, 2018. PHOTO: PABLO MARTINEZ MONSIVAIS/ASSOCIATED PRESS

Justice Stephen Breyer lamented last week that the Supreme Court had overturned “a well-reasoned decision that has caused no serious practical problems in the four decades since we decided it.” Dissenting from Justice Clarence Thomas’s majority decision in Franchise Tax Board v. Hyatt, Justice Breyer added: “Today’s decision can only cause one to wonder which cases the Court will overrule next.”

Court watchers assumed the two justices were arguing about abortion, although the case had nothing to do with that issue. But the clash over stare decisis—the doctrine that courts must respect precedent as binding—runs far deeper. It is a manifestation of the crisis of legitimacy that has split Americans into two increasingly hostile camps.

On Justice Thomas’s side is the belief that the government’s authority rests on the written Constitution. This view regards a self-governing republic—designed to protect the individual’s right to pursue his own happiness in his own way, in his family and local community—as the most just and up-to-date form of government ever imagined, even 232 years after the Constitutional Convention.

Justice Breyer, by contrast, assumes America is rightly governed by a “living Constitution,” which evolves by judicial decree to meet modernity’s fast-changing conditions. Judges make up law “with boldness and a touch of audacity,” as Woodrow Wilson put it, rather than merely interpreting a Constitution he thought obsolete.

Wilson also championed a corps of supposedly expert, nonpartisan administrators in such agencies as the Interstate Commerce Commission and the Federal Trade Commission, to make rules like a legislature, carry them out like an executive, and adjudicate and punish infractions of them like a judiciary. Wilson and Franklin D. Roosevelt, who supersized this system, considered it the cutting edge of modernity in the protection it afforded workers and the disadvantaged. Call it the Fairness Party, as distinct from Justice Thomas’s Freedom Party.

The Freedom Party does not view the rule by decrees of unelected officials, however enlightened, as an advance over democratic self-government. If the framers had wanted such a system, they could have stuck with the unwritten British constitution, which had governed the American colonists for 150 years and evolves by judicial precedent. They wanted a written constitution, strictly limiting federal authority, because they knew that human nature’s inborn selfishness and aggression not only make government necessary but also lead government officials to abuse their power if not restrained.

U.S. history justifies the framers’ caution, as Justice Thomas has argued in hundreds of opinions since joining the court in 1991. At crucial junctures, the Supreme Court has twisted the Constitution that guarantees liberty toward government oppression.

Start with The Slaughter-House Cases (1873) and U.S. v. Cruikshank (1876), which blew away the protection of the Bill of Rights with which the 14th Amendment’s framers and ratifiers thought they had clothed freed slaves against depredations by state governments. The result was 90 years of Jim Crow tyranny in the South. “I have a personal interest in this,” Justice Thomas once said. “I lived under segregation.” He grew up in 1950s Savannah, Ga., where the law forbade him to drink out of this fountain or walk across that park. If the Fairness Party thinks Supreme Court distortions can twist only to the left, it should think again. Far better to stick to the original meaning, as Justice Thomas urges.

Look what happened when the court allowed Congress and the president to proliferate administrative agencies with no political accountability. The justices have “overseen and sanctioned the growth of an administrative system that concentrates the power to make laws and the power to enforce them in the hands of a vast and unaccountable administrative apparatus that finds no comfortable home in our constitutional structure,” Justice Thomas wrote in a 2015 opinion, the first of a series that argued for reining in the administrative state.

Such lawless power ends in tyranny, as in the case of Joseph Robertson. As these pages recently reported, the Montana rancher dug two ponds fed by a trickle that ran down his mountain acres, only to be prosecuted and imprisoned for polluting “navigable waterways,” as absurdly defined by bureaucrats at the Environmental Protection Agency.

Beginning with the Warren Court in the 1950s, bold and audacious justices began making up law out of the Constitution’s “penumbras, formed by emanations”—literally, shadows and gas. As Justice Thomas has objected, the court invented rights that sharply curtailed the traditional order-keeping authority of police and teachers, making streets, schools, and housing projects in poor neighborhoods dangerous, and depriving mostly minority citizens of the first civil right—to be safe. The justices have even trampled the Bill of Rights, sanctioning campaign-finance laws that curtail the political speech at the core of First Amendment protections.

It’s as if the court respects no limits. Thus the hallmark of Justice Thomas’s jurisprudence is his willingness to overturn prior decisions when he thinks his predecessors have construed the Constitution incorrectly. The justices readily overturn unconstitutional laws passed by a duly elected Congress. Why be more tender toward judicial errors?

“Stare decisis is not an inexorable command,” Justice Thomas observes in Hyatt. He has said elsewhere: “I think that the Constitution itself, the written document, is the ultimate stare decisis.” Justice Breyer asks which cases the court will overrule next. Justice Thomas’s reasonable answer: Whichever ones go against the Constitution.

Mr. Magnet is editor-at-large of the Manhattan Institute’s City Journal, a National Humanities Medal laureate and author of “Clarence Thomas and the Lost Constitution.”